What We Find in the Sewers

Our ancestors once spread their excess effluent on their fields; now we mine it for vital molecules.

This article concludes Issue 07. See you next month for the launch of Issue 08!

The sewer is the conscience of the city. Everything there converges and confronts everything else.

— Victor Hugo, Les Misérables

In his book What Is Life?, Schrödinger called humans “entropy machines.” Extracting order from our environment to compensate for our disorder, he said, is what defines us as living beings. The same claim could be made of defecation. We strip the world of the nutrients and substrates we need, leaving only waste behind. Yet even though shitting is one of the few universal human experiences, the topic as a whole suffers from euphemism.

What happens in the loo, restroom, garderobe, privy, house of office, necessarium, Gongfermor’s domain, jakes, close stool, Spanish house, necessary house, water closet, temple of convenience, or house of harkening is seldom discussed, let alone what happens to the material deposited therein. We in the developed world rarely pause to consider the vast and complicated system designed to make the removal of waste a swift and clean experience.

Historically, however, this has not been the case. Nor is human effluent the only component of the vast stream of waste produced by any large collection of people. Our ancestors had to live alongside animal dung and dirt, household rubbish, and the surface runoff from washing, cleaning, or agriculture. This experience has been so unpleasant that the constant need to dispose of, use, treat, or simply avoid the accumulation of waste has been a major driver of innovation. Indeed, rules to limit and control waste disposal have often been cited as the first forms of urban regulation, a rallying point for centralizers and organizers.

At first glance, then, the course of sewage history would seem to be the triumph of waste removal and sequestration. As cities grow larger, however, the need for innovation and greater resource efficiency has made sewage worthy of further consideration. Scientists and governments have begun to reexamine what valuable resources and data can be found in this brown gold. The Covid-19 pandemic demonstrated the vast potential of wastewater-based epidemiology, while research into the gut microbiome’s influence on human health and behavior continues to burgeon. Engineers have likewise begun to see sewage not simply as a waste stream but as a resource to be mined for valuable elements or even for energy.

Ancient World

The problem of sewage has existed ever since humans began congregating in large numbers. Whereas nomadic peoples could simply leave their waste behind, settlers faced the problem of waste buildup and removal. For early farmers, however, sewage was not a curse but a blessing.

While it was long held that manure wasn’t used as fertilizer until the Iron Age and Roman times, recently discovered evidence shows that Neolithic farmers spread dung on their fields as early as 8,000 years ago.1 The limited manure supply was used strategically to nourish more temperamental cereals and pulses such as einkorn wheat, peas, and lentils, while hardier hulled barley was left to fend for itself on more marginal soils.

The use of manure can also be seen in excavations of the Bronze Age town of Ugarit in northern Syria, where many of the houses have a stone-lined pit containing “potsherds, bones, and flints … [and] human and animal excrement.” Paleoanthropologists have suggested that waste was composted with organic matter in such pits to slow its decomposition, preventing nitrogen, phosphorus, and potash from either being degraded by fungi or leaching away. Furthermore, in arid climates, manure and excrement oxidize rapidly, losing their fertilizing power. Composting them in covered pits therefore shielded them from sun, air, and moisture, helping to retain key nutrients that could later be used to enrich agricultural soils.2

Waste was also used to heat dwellings. When mixed with straw or other organic matter, dung was a convenient fuel in areas where wood was scarce.3 This particular application was significant enough to merit mention in the Old Testament when God says to Ezekiel, “I will let you bake your bread over cow dung instead of human excrement” (Ezekiel 4:15).

As towns and populations grew, however, they often produced a glut of waste. Whatever could not be spread on the fields, burned in homes, or otherwise put to use needed to be disposed of. This typically meant that the surplus excreta was simply swept outside.

Excavations of Tel Ashkelon in Israel found a higher occurrence of organic matter in the waste strata outside of buildings than in them, suggesting a sweeping of internal spaces into the street. This waste, mixed with silt from walls and roofs as well as urine (the Hebrew Bible often defines a man as “one who urinates against a wall”), was then trodden down, causing the street level to rise and requiring inhabitants to step down into their houses. Entrances eventually became so low that the doors had to be knocked in and releveled with the street. This accumulation is part of the reason that ancient cities sit atop layer upon layer of ruins; the oldest substrata have literally been buried in waste.

Though a less frequently considered component of sewage treatment today, the drainage of standing water was vital in Iron Age towns. Stagnant water provided ideal breeding grounds for mosquitoes, pests, and diseases. Moreover, water that lingered on surfaces gradually weakened building foundations and eroded mud- or dung-based walls, increasing the risk of damage or collapse. Trenches in the middle or at the side of roads were used to channel this water out of the city gates. Excrement, by contrast, tended to be carried out to the fields. Ancient Jerusalem, for example, had a specific Dung Gate for this purpose.

In South Asia, the Indus Valley Civilization (2600–1900 BC) developed a much more sophisticated system. Cities like Mohenjo-daro in modern-day Pakistan boasted grid-planned streets, standardized fired-brick buildings, and above all, elaborate covered drainage networks, serving populations of 30,000–40,000 people.

Another city massive enough to necessitate central planning was Rome, which, with a population of one million in the 2nd century AD, produced an estimated 500 tons of waste daily. The cost, manpower, and inefficiency of attempting to keep a city of this scale clean using manual waste removal made large-scale infrastructure vital. Clean streets and bodies became a hallmark of civilization during the Empire, and Rome developed a sophisticated hygiene culture. In fact, it has been argued that the fashion for baths was the major motivation for the building of the aqueducts and the sewers of Rome.

The water of the baths themselves, which Marcus Aurelius described as an “offensive mixture” of “oil and sweat, dirtiness and water,” needed constant replenishing. Yet in gravity-fed systems that flowed directly into a river, sewers were in danger of silting up unless the water kept flowing. The lack of effective valves and plumbing, therefore, made Roman sanitation extremely water-intensive.4 To keep up with demand, Agrippa diverted water from surrounding springs and funneled it down the aqueducts into Rome. Rome thus sat on multiple rivers: the natural Tiber and the vast artificial flow of the aqueducts. The Romans themselves would have recognized this parallel, as the Cloaca Maxima, Rome’s great main sewer in which the city’s detritus accumulated, was described by Strabo as large enough for a river to flow through.

Into this sewer, the Romans threw animal dung from carts, blood from slaughterhouses and butchers, waste products from industry, dirt and mud, and abandoned rubbish. Nero was even rumored by Suetonius (admittedly not Nero’s biggest fan) to enjoy going out at night, killing men he came across, and throwing their bodies into the sewer.

Removing human excrement was, however, a secondary purpose of Rome’s vast sewer system. There are very few examples of private latrines in Rome, which carried the risk of pipes becoming blocked, bad smells, vermin, and built-up gases causing explosions. Instead, historians have assumed that most Romans used the limited public facilities, though “there is abundant evidence showing that many people relieved themselves in streets, doorways, tombs, and even behind statues.”

Ancient Rome is where we witness the emergence of specialized industries to turn waste into an important resource. Urine, for example, when left to ripen, was prized as a source of highly alkaline ammonia that acts as a degreaser and disinfectant, breaking down fats. Large pots were placed around the city in which urine was collected and sold for use in tanning leather or as a detergent. This was such a major industry that Vespasian famously taxed those who bought urine, telling his fastidious son Titus, “Pecunia non olet” — “Money does not stink.”

Despite the relative hygienic sophistication of Roman civil engineering, Romans still suffered from diarrhea, dysentery, infectious hepatitis, salmonella, and many other illnesses that came from contact with raw sewage. This no doubt contributed to an average life expectancy of only 20 to 30 years. Nevertheless, no other city came close to Rome’s sanitary accomplishments for over a millennium.

Early Modern Europe

While the Romans largely saw excrement as a nuisance, in Early Modern Europe it became vital for the safety of the realm. The same ammonia used in tanning could also be oxidized by soil bacteria into nitrates, which, combined with potash from wood ash, yield potassium nitrate (KNO₃), or saltpeter, a key ingredient in gunpowder. Increasing use of guns and muskets by the Tudor kings, coupled with limited understanding of saltpeter production, made the English crown highly dependent on imports from the Continent. By 1558, as much as 90 percent of English saltpeter was imported, according to historian David Cressy. The fact that such a crucial military resource could be cut off drove many countries to seek local sources.

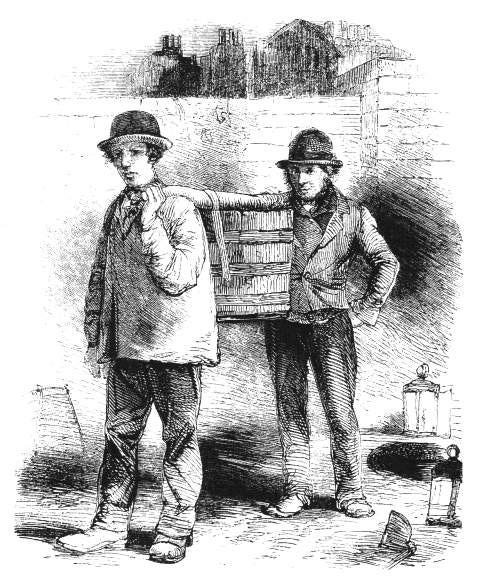

The European Wars of Religion (1522–1714) made Continental saltpeter supplies even less reliable, leading Queen Elizabeth I to copy the French system of granting royal warrants, known as droit de fouille (“the right to dig”), which allowed agents to excavate any soil suspected of containing nitrates without having to compensate the landowner. This gave rise to a new specialized and despised profession, the saltpeterman.

These saltpetermen were commissioned to seek out sources of nitrogen in the form of buried organic matter, usually at places where it accumulated, such as stables, dovecotes, and cellars. Any property owner who refused them access risked prosecution. They were even authorized to commandeer carts to transport saltpeter and were exempt from any taxes or tolls on English roadways.5

As a result, saltpetermen were widely unpopular and were frequently harassed or even beaten by locals, who sometimes resorted to bribery to keep them off their land. Yet the need for saltpeter was so great that such complaints were dismissed, and by the end of Queen Elizabeth’s reign in 1603, the new system furnished up to half the kingdom’s annual needs.6

By the Restoration of Charles II, the “Merry Monarch,” in 1660, the country’s saltpeter needs were being met by imports from India, which allowed the British system of saltpetermen to wind down. With saltpeter readily available through colonial trade, domestic production was no longer vital to national security.

Saltpeter was so significant in the maritime trade that, according to the standard practices of East India captains, 16 percent of a ship’s cargo space was allocated to it. Sometimes saltpeter was shipped in heavy bags, but more often it was shoveled loose into the hold, serving as both cargo and ballast. It gave off a strong, sewage-like odor, which aboard trading ships sailing in tropical conditions came to be euphemistically known among sailors and travelers as “the smell of the ship.”

As the need to mine sewage for saltpeter decreased, however, the population and its waste surged. London grew from 50,000 residents in 1500 to over one million in 1800, and together with its growing use of horses, this created a volume of sewage never before seen.7

It was, however, the water closet, invented by Sir John Harington (not Thomas Crapper) in the 1590s and widely adopted in the 19th century, that forever changed Britain’s sewers.

Traditionally, feces and urine had been deposited in a cesspit that was periodically emptied by night-soil men; the water closet instead used a raised cistern to flush any leavings away. Between 1820 and 1844, the number of water closets in London grew tenfold. This surge in usage dramatically increased water consumption, from an average of 160 gallons per household per day in 1850 to 244 gallons by 1856, with total daily consumption rising from 43.3 million to 80.8 million gallons.
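The relative size of these two increases can be checked with a few lines of arithmetic. This is a minimal Python sketch that uses only the figures quoted above and assumes, since the text does not say otherwise, that both pairs of figures span the same 1850 to 1856 period; `percent_increase` is a hypothetical helper name.

```python
def percent_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Figures quoted in the text for London (assumed to cover the same years)
per_household = percent_increase(160, 244)   # gallons per household per day
total_daily = percent_increase(43.3, 80.8)   # millions of gallons per day

print(f"Per-household use: +{per_household:.1f}%")
print(f"Total daily use:   +{total_daily:.1f}%")
```

Total use (about 87 percent) grew faster than per-household use (52.5 percent), which would be consistent with more households drawing water over the period, although these figures alone cannot confirm that.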

The effect was a far higher volume of liquid being disposed of into cesspools. To speed up the absorption of liquid into the ground, cesspools were dug deeper, into the first sand layer (London being largely built on impermeable clay). While this reduced the volume of waste in the cesspit, it also allowed human waste to enter the groundwater, permeate wells, and saturate the ground. In 1815, to cope with the increase in wastewater, households were permitted to connect their drains to London’s sewers, which had historically been only covered drainage channels for rainwater and street runoff that ran into the Thames.

Both the sewage-polluted Thames and overflowing cesspits contributed to endemic waterborne diseases, such as dysentery and typhoid fever. The poor also experienced high rates of infant mortality and persistent outbreaks of tuberculosis, smallpox, and scarlet fever, which made the life expectancy of an urban worker in 1838 only around 25 years. Cholera was an even greater source of terror, killing more than 6,000 people over a few months in the London outbreak of 1832.

The deaths caused by sewage pathogens energized a new school of sanitation reformers who pioneered the emerging field of medical statistics to improve the lives of the urban poor.

The foremost sanitation campaigner at this time was Edwin Chadwick. A disciple of social reformer and jurist Jeremy Bentham, Chadwick pioneered the use of systematic surveys to support his social campaigning and railed against traditional local landowner-led parish government, which he saw as unprogressive. After his reforms of the English Poor Law that replaced generous welfare programs for the poor paid for by local taxpayers with harsh centralized workhouses, Chadwick turned his attention to bad sanitation, which he argued inflated the cost of the Poor Law by making workers sick and creating cholera widows and orphans.

One of Chadwick’s major innovations was using the new field of statistics to support his arguments. The field was founded on the idea that collecting data about the state (hence the name) would allow for a scientific method of government. Chadwick turned to William Farr, a statistician at the new General Register Office, to help him write the report that would make his name and inaugurate the field of public health.

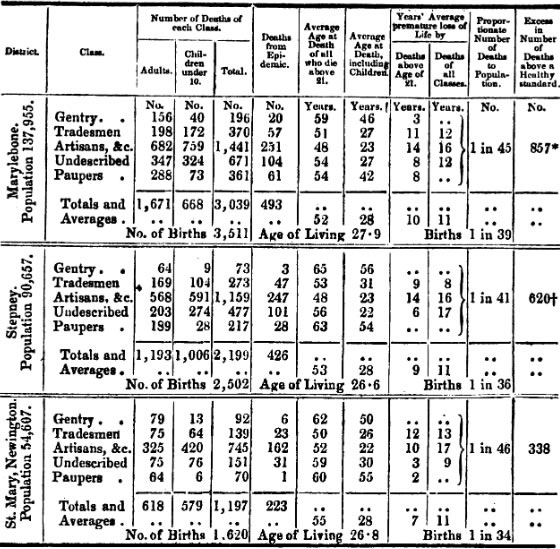

Published in 1842, The Report on the Sanitary Condition of the Labouring Population proved, among other things, that poor parishes with undrained streets had repeated outbreaks of typhus and cholera and lower life expectancies than the drained, wealthy areas.

More important still was the rhetorical and pragmatic power of Chadwick’s descriptions of London’s sewage problem, which he described as “a vast monument of defective administration of lavish expenditure and extremely defective execution.” In addition to such blistering critique, he laid out how the terrain could be harnessed to build more effective sewers, emphasizing the need for a constant downward gradient to prevent the buildup of silt and sediment and to make waterborne sewage both practical and economical.

The 1842 Report was an enormous success: more than 20,000 copies were sold. In response, legislators passed the Metropolitan Buildings Act of 1844, which required new buildings to connect their drains to sewers, and the Cholera Bill of 1846, which went further by empowering the Privy Council to compel any measures it saw fit to fight epidemics. This marked the first time that the Crown accepted responsibility for the health of its subjects.

The Report set off a nearly two-decade-long battle to build a sewer system for London. The major hurdle was how to fund the system. The London urban area had by this point expanded well beyond the traditional City of London, and each borough, parish, and vestry jealously guarded its rights and privileges. A series of Royal Commissions struggled to balance the reforming zeal of Radicals such as Chadwick and Evangelical philanthropists such as the Earl of Shaftesbury with the unwillingness of local potentates to give up control, and with traditionalists who saw the centralizing instinct of the Radicals as un-English. For 10 years, six Commissions sat in limbo, collapsing and re-forming as they tried to come up with a sewer design that would both achieve their goals and not cost London ratepayers too much.

Balancing these competing interests was an incredible challenge. Joseph Bazalgette, future architect of London’s modern sewers, became Chief Engineer only after his superior, Frank Forster, died from overwork. Forster’s difficulties are attested to by his obituary, which bitterly notes: “A little more kindliness out of doors, and a more general and hearty support from the Board he served, might have prolonged a valuable life, which as it was, became embittered and shortened, by the labours, thwartings, and anxieties of a thankless office.”

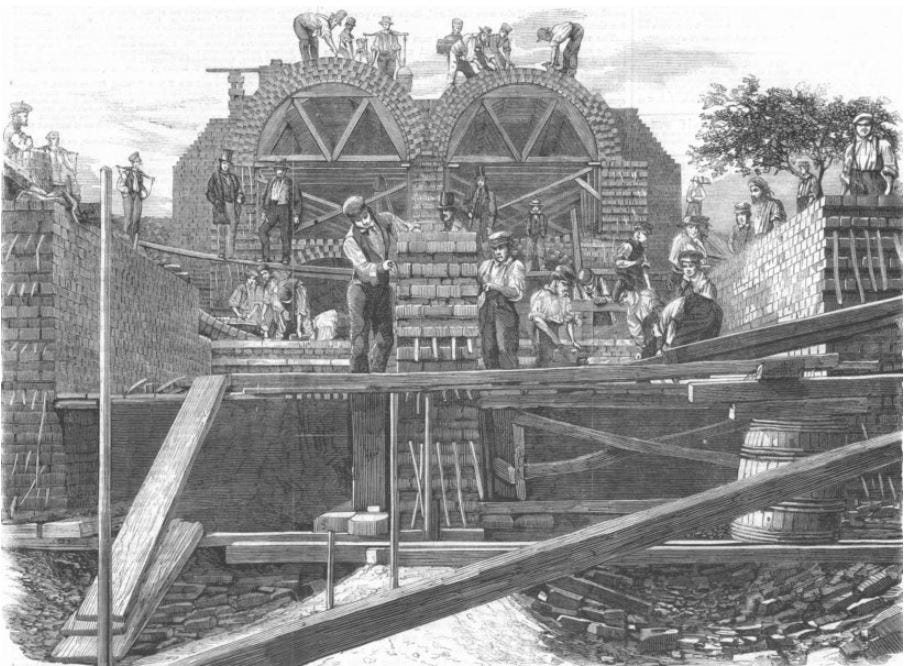

The stalemate was only broken after an unusually hot and dry summer struck London in 1858. The smell of the Thames, which had for 43 years been dubbed “the great sewer of London,” became, according to Charles Dickens, “most head-and-stomach-distending.” This was what became known as the “Great Stink.” Most MPs working in the Houses of Parliament, which sit on the river at Westminster, believed at the time that bad smells were the cause of diseases such as cholera and typhoid (the miasma theory of disease). They found the stink of the nearby Thames so alarming that they rapidly removed restrictions on Bazalgette, granting his new Metropolitan Board of Works the right to raise money via government-backed bonds and eliminating both Parliamentary and local government vetoes.8

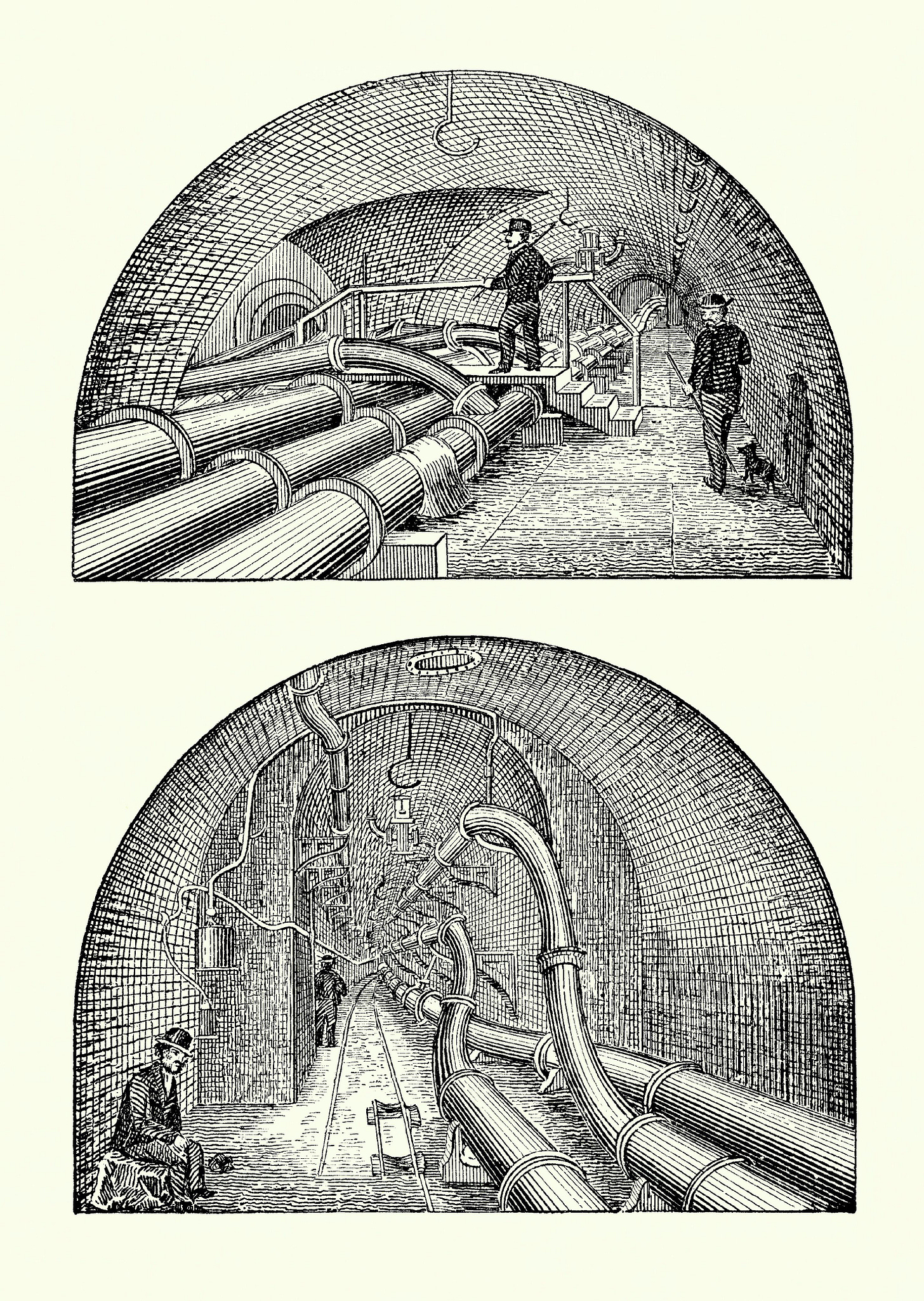

The construction of the sewers was a vast and impressive undertaking. The main sewers on each side of the river were separated into high, middle, and low elevation tunnels, connected by huge pumping stations. This was crucial to prevent the issues that had plagued historic sewers, which had not maintained a consistent drop, leading to sedimentation and blockages. These tunnels also had to be designed to fit around preexisting subterranean obstacles, such as London’s canals and underground lines. These new sewers brought wastewater to the banks of the Thames, where intercepting sewers, built under the new Embankments, captured the sewage before carrying it downstream to outflow points beyond the city.

Bazalgette deserves particular credit for anticipating further population growth. In planning the sewers, he had to make assumptions about the future population of the area to accommodate its water needs and drainage requirements. He designed his system for a population 25 percent greater than the one then living in London, using 30 gallons of water per day. By the time of Bazalgette’s death in 1891, however, the population had grown to 20 percent more than his prediction and was using as much as 90 gallons of water a day. Despite this, his design was able to meet the greatly expanded demand and, with limited upgrades, to cope with a population of over 10 million in the 21st century.
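To see how far actual demand outran the design, the two overshoots can be multiplied together. The Python sketch below assumes, as the text implies but does not state, that the 30- and 90-gallon figures are per person per day. The function name `demand_vs_design` is hypothetical.

```python
def demand_vs_design(pop_over_forecast: float, actual_per_capita: float,
                     design_per_capita: float) -> float:
    """Ratio of actual daily water volume to the volume the system was designed for.

    pop_over_forecast: fraction by which population exceeded the forecast
    actual_per_capita, design_per_capita: gallons per person per day (assumed units)
    """
    return (1 + pop_over_forecast) * actual_per_capita / design_per_capita


# Figures from the text: design population = 1.25 x the 1850s population at 30 gal/day;
# by 1891, population ran 20 percent above that forecast at up to 90 gal/day.
DESIGN_MARGIN = 1.25
pop_vs_1850s = DESIGN_MARGIN * 1.20          # 1891 population relative to the 1850s
ratio = demand_vs_design(0.20, 90, 30)

print(f"1891 population: {pop_vs_1850s:.2f}x the 1850s figure")
print(f"Peak water volume: {ratio:.1f}x the design assumption")
```

On these assumptions, the system was handling roughly three and a half times the water volume it was planned for. The population overshoot contributes only a factor of 1.2; most of the gap comes from the tripling in per-person use.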

Public Medicine

Even as the sewers were being built, the views of those who had campaigned for them began to change.

Chadwick and other Radicals had campaigned for sewers largely to alleviate the miasmas they thought caused disease and increased the cost of Poor Law payments to local ratepayers, seeing medical prevention as a cost-saving exercise. By 1854, however, Chadwick had been pushed out of public life because of his abrasive and autocratic manner, in particular his high-handed campaigning for glazed pipes over brick sewers and his insistence on transporting sewage into the countryside to be sold.

His work was taken up by a new generation of statistically trained doctors who began by challenging the miasma theory. Their predecessors’ notions were not based purely on superstition, however. William Farr had published influential maps showing that elevation above sea level was inversely correlated with cholera deaths, seeming to give credence to the notion of tainted air.

The first to seriously challenge the miasma theory was Dr. John Snow. An English physician and early anaesthetist, Snow had experienced a cholera outbreak while an apprentice.9 Cholera, which had arrived in Britain from India in 1831, caused vast panic in Victorian England. It seemed to strike at random, defying treatment and leading to death from diarrhea and dehydration.

Snow first outlined his theory that cholera was caused by a waterborne element in 1849, but the medical and scientific establishment dismissed him. He lacked microbiological evidence, and the miasma theory aligned more neatly with prevailing prejudices (and existing statistical methods) that disease originated from moral failings among the poor.

Snow’s opportunity to bolster his theory came during the 1854 cholera outbreak in Soho, when over 600 people died in a matter of days. Using what we’d now call epidemiological methods (documenting cases and where they occurred), he traced the outbreak to the Broad Street water pump. He persuaded the local council to remove the pump handle, and shortly afterward, the outbreak subsided. Though this didn’t prove his theory, it offered a compelling case study.

Despite being a firm believer in the miasma theory, Farr was one of the few to take Snow’s work seriously, and he began statistically examining cholera deaths by water supplier, contrasting the Southwark and Vauxhall Company, which drew water from a polluted part of the Thames, with the Lambeth Waterworks, which drew water from a cleaner upstream source. His analysis, published in the mid-1850s, showed that cholera deaths were dramatically higher among customers of the dirtier supply, supporting Snow’s theory with hard data.

The final proof came during the 1866 cholera outbreak, the last major one in London. Farr studied the spread of the disease and found that it matched the distribution of water supplied by the East London Waterworks Company, which had failed to adequately filter water from the contaminated River Lea. This time, there was no doubt, and Farr publicly endorsed Snow’s waterborne theory.

Though John Snow died in 1858, just before his ideas gained full acceptance, his work, supported and expanded by Farr’s statistical authority, laid the foundation for modern epidemiology.

Farr’s epidemiological conversion also took place at the same time as a moral one that would shape the field of public health. Given that disease was the product of one’s environment, he argued, it was immoral to allow unsanitary living conditions to condemn the poor to ill health. Improving public health, which had been for Chadwick simply a means to reduce the costs of the Poor Law bill, became for Farr, and the new field of public medicine, a goal in and of itself.

Modern Sewage Treatment

While the sewers removed sewage from London, they simply moved the problem elsewhere. When a pleasure steamer sank downstream of the city near the sewage outflows in 1878, it caused a public outcry. Witnesses to the sinking described the river as black, greasy, and foul-smelling, as the sewage had not yet been carried out to sea. Contemporary reports suggested that passengers who jumped into the water were overcome by the filth. One chemist surmised that many passengers died not from drowning but from “uncontrolled vomiting.”

Engineers and scientists began to realize that simply transporting sewage away from population centers wasn’t enough; it had to be treated before being discharged.

Engineers aiming to treat sewage had three major forms of pollution to contend with: pathogenic, organic, and nutrient. Pathogenic pollution consists of bacteria, parasites, and viruses that can harm humans and animals but can be treated with chlorine. Organic and nutrient pollution, on the other hand, are found in much greater quantities in sewage and can’t simply be killed; they must instead be broken down or removed.

The danger of organic pollution (carbon-containing compounds) is that microorganisms in water consume oxygen as they break these compounds down. If the rate at which they consume oxygen, often expressed as Biological Oxygen Demand9 (BOD), exceeds the rate at which oxygen is replenished, the water becomes hypoxic and suffocates aquatic life. Nutrient pollution causes similar BOD fluctuations. Here, nutrients such as phosphorus and nitrogen-containing ammonia can promote sudden, massive algal blooms. Some of these blooms kill river-dwelling life by releasing phycotoxins, and when the blooms themselves die off, the BOD spikes as microbes decompose their biomass.
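The balance between oxygen consumption and replenishment can be quantified. A standard textbook way to do so, not drawn from the article, is the Streeter-Phelps oxygen-sag model for a river downstream of a single effluent discharge. The Python sketch below assumes first-order BOD decay and first-order reaeration. All parameter values are hypothetical, and the 2 mg/L hypoxia threshold is a common convention rather than a universal standard.

```python
import math


def oxygen_deficit(t: float, L0: float, D0: float, kd: float, ka: float) -> float:
    """Streeter-Phelps dissolved-oxygen deficit (mg/L) t days below a discharge.

    L0: ultimate BOD of the river just below the discharge (mg/L)
    D0: oxygen deficit just below the discharge (mg/L)
    kd: deoxygenation rate constant (1/day), how fast microbes use up oxygen
    ka: reaeration rate constant (1/day), how fast oxygen dissolves back in
    """
    if math.isclose(ka, kd):
        raise ValueError("this closed form requires ka != kd")
    consumption = kd * L0 / (ka - kd) * (math.exp(-kd * t) - math.exp(-ka * t))
    return consumption + D0 * math.exp(-ka * t)


def critical_time(L0: float, D0: float, kd: float, ka: float) -> float:
    """Days until the deficit peaks, i.e. when dissolved oxygen is lowest."""
    return math.log((ka / kd) * (1 - D0 * (ka - kd) / (kd * L0))) / (ka - kd)


# Hypothetical river and effluent values, chosen only for illustration
DO_SAT = 9.0           # dissolved oxygen at saturation, mg/L
L0, D0 = 30.0, 1.0     # BOD and initial deficit below the outfall, mg/L
KD, KA = 0.3, 0.5      # rate constants, 1/day

tc = critical_time(L0, D0, KD, KA)
min_do = DO_SAT - oxygen_deficit(tc, L0, D0, KD, KA)
print(f"Lowest dissolved oxygen: {min_do:.2f} mg/L after {tc:.1f} days")
print("Hypoxic" if min_do < 2.0 else "Not hypoxic")  # 2 mg/L: a common convention
```

Raising `KA` (a faster, more turbulent river) or lowering `L0` (treating the effluent before release) lifts the minimum. This is the trade-off treatment plants are built to manage. Because the model is linear, a very strong effluent can produce a negative oxygen value, which only signals that oxygen would be exhausted.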

A turning point in industrial waste treatment occurred when we realized we could harness the very microorganisms that were causing us grief. By isolating the treatment process from the environment, we could protect aquatic biomes from BOD fluctuations, while allowing the microbes to carry out metabolic conversions that could decompose the effluent.

An early attempt to industrialize this approach was “trickle filtration,” developed in the 1890s. In trickle filtration, wastewater is purified as it passes over a substrate, such as rocks or gravel, that provides a large surface area for microorganisms to grow into a biofilm. As wastewater flows past the biofilm, the microorganisms break down organic matter and remove pollutants through processes such as nitrification (turning ammonium into nitrate) and denitrification (turning nitrate into nitrogen gas). The treated water is then collected at the bottom and directed to a secondary clarifier, where any remaining solids are removed.
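The two nitrogen steps named above can be summarized chemically. Below are the textbook overall reaction for nitrification and the reduction half-reaction for denitrification. These are standard stoichiometric summaries, not equations from the article. In practice, the electrons for denitrification come from organic matter in the wastewater.

```latex
\begin{align*}
\text{Nitrification:}\quad & \mathrm{NH_4^+ + 2\,O_2 \longrightarrow NO_3^- + 2\,H^+ + H_2O} \\
\text{Denitrification:}\quad & \mathrm{2\,NO_3^- + 12\,H^+ + 10\,e^- \longrightarrow N_2 + 6\,H_2O} \\
\text{Oxygen used in nitrification:}\quad & \frac{2 \times 32\ \mathrm{g\,O_2}}{14\ \mathrm{g\,N}} \approx 4.57\ \mathrm{g\,O_2\ per\ g\,N}
\end{align*}
```

The last line shows why ammonia adds to oxygen demand. Each gram of ammonium nitrogen that is fully nitrified consumes roughly four and a half grams of dissolved oxygen.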

While trickle filtration effectively allowed for the large-scale return of treated water back into rivers, the need for a high surface-to-volume ratio for the growth of biofilms meant that the tanks required for trickle filtration were fairly large. In light of this, engineers began to investigate other methods.

This culminated in the discovery of the activated sludge process by British chemists Edward Ardern and William T. Lockett in 1913. While experimenting with aeration, Ardern and Lockett noticed that bubbling air through wastewater in a bottle increased, rather than cleared, its murkiness. They found that adding oxygen to the untreated sewage allowed clusters of beneficial aerobic bacteria to grow, forming dense biological masses (known as “flocs”) that actively consumed organic waste. When this mass was allowed to settle, it could be reused in a new batch of sewage, where it would dramatically speed up the process and rapidly lower BOD.

They had essentially stumbled upon a self-perpetuating, highly efficient biological treatment system. Compared with the commonly used trickle filter method, where aeration came from splashing or diffusion, the activated sludge process used mechanical aeration to provide more constant mixing and oxygen supply. This, in turn, allowed much higher biomass concentrations that could break down matter more quickly.

However, one of the major benefits of activated sludge treatment, its ability to remove nitrogen and other nutrients, turned out to be its major weakness: it removed all kinds of nutrients from wastewater, not only the harmful ones. Venting nitrogen as a harmless gas (N₂) rather than letting it accumulate in waterways might prevent river pollution, but that nitrogen is then lost to the atmosphere. Such over-stripping thus eliminated materials that could otherwise be recovered or recycled for use in agriculture and industry.

It is no coincidence that the decline of sewage reuse coincided with the rise of industrialized agriculture. The development of the Haber-Bosch process, also in 1913, enabled the mass production of synthetic nitrogen fertilizers, reducing reliance on organic waste. But this created an ironic cycle: cities now spent vast amounts of energy removing nitrogen from sewage, despite its former value as fertilizer. With new ways to fertilize farmland, waste was no longer an economic asset but an environmental problem.

While the Haber-Bosch process helped spur the Green Revolution and a resultant population explosion, it was then, and still is today, immensely costly in both money and energy. It currently consumes roughly two percent of global energy, and around 70 percent of the ammonia it produces is used in agriculture. About 65 percent of the nitrogen in fertilizer is lost to runoff. Of the remainder that reaches our diets, as much as 80 percent is excreted and then denitrified in municipal wastewater plants, which themselves use around 3 to 5 percent of a city’s energy. Multiplying these shares (0.70 × 0.35 × 0.80 ≈ 0.196) implies that as much as 19.6 percent of all ammonia produced each year may be lost as gas through sewage denitrification.
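The roughly 19 percent figure follows from chaining the quoted shares, and this Python sketch makes the chain explicit. It assumes, as the estimate implicitly does, that all nitrogen retained by crops is eaten by people, excreted into sewers, and fully denitrified. That simplification ignores livestock feed, food waste, and unsewered populations, so the result is best read as an upper bound. The function name `share_lost_via_sewage` is hypothetical.

```python
def share_lost_via_sewage(ag_share: float, runoff_loss: float,
                          excreted_share: float) -> float:
    """Fraction of all ammonia output that ends up denitrified in sewage.

    Simplified chain (assumption): every unit of nitrogen the crops retain is
    eaten by people, excreted into sewers, and fully denitrified.
    """
    retained_by_crops = 1 - runoff_loss
    return ag_share * retained_by_crops * excreted_share


# Shares quoted in the text
AG_SHARE = 0.70        # ammonia used in agriculture
RUNOFF_LOSS = 0.65     # fertilizer nitrogen lost to runoff
EXCRETED = 0.80        # upper-end share of dietary nitrogen excreted

share = share_lost_via_sewage(AG_SHARE, RUNOFF_LOSS, EXCRETED)
print(f"Up to {share:.1%} of annual ammonia output")
```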

Nevertheless, the advantages and utility of the activated sludge process were so significant that activated sludge treatment sites proliferated rapidly across the world. Great Britain and Germany developed pilot plants, while the very first full-size plant was built in Houston in 1916.

The challenge of treating wastewater appeared solved. Activated sludge could reduce nutrient, pathogen, and BOD levels sufficiently to allow for waterway release and do so in small, compact plants that could be located in or near major cities. This marked the beginning of the peak period of the avoidant attitude to sewage, an attitude that continued into the Sixties. Waste was moved, as rapidly as possible, away from humans and detoxified. Public health officials saw little to no utility in sewage, which was best kept out of sight and out of mind.

Mining Waste

However, since the 1960s, rising concerns surrounding declining natural resources and national resilience have caused many to reexamine the resources flushed away with our sewage. Many of the contaminants removed by sewage treatment, such as phosphorus and nitrogen, are valuable in their own right. Revisiting the perspective of the Roman tanners and launderers or the British saltpetermen, countries and companies have been developing techniques to harvest waste, so-called “urban mining.”

Energy is one such potentially valuable resource. Dairy farms, which produce large amounts of a slurry containing cow manure, leftover feed, and straw, have started harnessing anaerobic digestion to convert this waste into energy. Anaerobic digestion uses colonies of microbes that mimic the contents of a ruminant’s stomach, breaking down organic matter to produce methane, a gas that can be burned as a carbon-neutral substitute for fossil natural gas. One of the early references to this phenomenon was recorded in 1764, when Benjamin Franklin wrote to an English chemist about how he had managed to set a small lake alight in New Jersey.10 Anaerobic digestion was used by Britain and other European countries during the Second World War for heating and lighting when coal was scarce. It remains a viable technology, producing around 3.5 percent of Britain’s yearly electricity, but the low power density of sewage means it struggles to compete with other renewable energy sources such as solar.

As well as being a source of energy, sewage can be a valuable source of water, especially in countries at risk of drought. Although systems that combine surface water and sewage have been criticized as too energy-intensive to serve as a source of water, Singapore sees this water as a resource and a way of reducing its dependence on Malaysian water. Its NEWater program uses reverse-osmosis membranes with ultrasmall pores which, at very high pressures, let water molecules through while filtering out nearly all contaminants from the treated wastewater. Israel and Australia have also embraced similar approaches to address water scarcity.

Even more unusual resources might be present in sewage. It has been estimated that the sewage sludge of a city of one million people could contain $13 million worth of minerals each year, including $2.6 million in dietary gold and silver.11 While no companies have yet tried to extract these precious metals, Germany passed a law in 2017 requiring large wastewater treatment plants to recover most of the phosphorus in their sludge to reduce dependency on Moroccan and Russian fertilizer mines. Struvite reactors for phosphorus reclamation are found across Europe and the U.S. The largest plant is in Chicago and produces 9,000 tonnes of struvite per year.

Finally, sewage can serve as a feedstock for biomass production. Because sewage is rich in nitrogen, phosphorus, and carbon, microorganisms grown on it have found a variety of uses. One of the most speculative is growing algae on sewage-derived nutrients to produce biodiesel, a carbon-neutral fuel extracted from the oils produced by microalgae. Algal fuel has long been touted as a resource of the future, and the All-gas plant in Spain generates 100 tonnes of biomass per hectare per year, using sewage nutrients and sunlight to manufacture a fuel that can power a car. Similar plants have been built in Alabama, and one in Holland makes bioplastics.

A Dutch company has even tried to close the nitrogen loop completely by using microbes to convert sewage directly into protein. While poo burgers remain a food of the future, these proteins have current applications as fertilizers and animal feed, potentially reducing the need for the Haber-Bosch process.

While all these sewage-derived resources hold promise, the greatest resource in our waste may be something even more central to our modern world — information.

Data derived from our sewage can provide an unvarnished look into the health and behavior of a population. Viruses, little more than protein-wrapped bundles of DNA or RNA, are well suited to detection by wastewater-based epidemiology (WBE), but the first successful example of gleaning information from sewage came from investigations of illegal drug use. The approach was first definitively demonstrated in 2005, when researchers detected cocaine and its metabolized derivatives in the River Po.

In 2019, London researchers testing sewage outflows detected not just the presence of cocaine, but its metabolized form, proof that it had passed through human bodies. Concentrations were high enough to indicate widespread, frequent use across the city, and the drug had entered the Thames in quantities large enough to affect the local wildlife. A follow-up study found that London’s freshwater eels, long known for their resilience, were exhibiting signs of cocaine-induced stress and hyperactivity.

When it comes to WBE, though, polio was the first major medical success. Because only about 1 in 200 people infected with poliovirus develop paralysis, WBE has allowed emerging outbreaks to be detected without waiting for symptoms to appear, and has even guided the choice of the correct vaccine. In 2022, this technique proved its worth in London when a mutated, vaccine-derived poliovirus was detected in sewage before any cases of paralysis occurred, triggering an immunization campaign among children to prevent community transmission.

One of molecular biology’s most powerful diagnostic tools, the polymerase chain reaction (PCR), is used to detect viruses such as polio. The harsh environment of the sewers breaks viral genetic material into fragments too short for traditional whole-genome sequencing, making detection challenging. Known viruses can still be detected using primers, short sequences of DNA designed to bind to characteristic sequences of viruses that have already been sequenced. Detecting unknown pathogens, however, is much trickier.
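The primer idea can be illustrated with a toy in-silico check. This is a simplified sketch under stated assumptions, not how a PCR assay is designed: exact string matching stands in for primer binding, both the primer and its reverse complement are searched (a fragment may come from either DNA strand), and the function names and sequences are hypothetical. Real primers tolerate some mismatches, work as forward and reverse pairs flanking a target, and RNA viruses such as polio must first be copied into DNA by reverse transcription.

```python
# Toy in-silico primer search (hypothetical helper names and sequences).
# Exact matching is a stand-in for primer binding; real assays tolerate mismatches.

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Return the reverse complement of a DNA sequence (the opposite strand, read 5' to 3')."""
    return seq.translate(COMPLEMENT)[::-1]


def primer_hits(read: str, primer: str) -> list[tuple[int, str]]:
    """Return (position, strand) for each exact primer match in a sequenced fragment.

    "+" means the primer itself occurs in the read; "-" means its reverse
    complement occurs, i.e. the read came from the opposite strand.
    """
    hits = []
    rc = reverse_complement(primer)
    for i in range(len(read) - len(primer) + 1):
        window = read[i:i + len(primer)]
        if window == primer:
            hits.append((i, "+"))
        elif window == rc:
            hits.append((i, "-"))
    return hits


# Hypothetical sewage-derived fragment and primer.
print(primer_hits("TTACGGATCCAAGT", "GGATC"))  # [(4, '+'), (5, '-')]
```

Because the search only ever looks for sequences already known, it captures why primers work for recognized viruses but cannot flag a pathogen nobody has sequenced.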

To get around this problem, researchers use metagenomic methods to sequence as many short fragments as possible before stitching them into a single genome by finding pairs of fragments whose sequences overlap. However, this is computationally intensive and can only suggest candidate genomes rather than definitively identify novel viruses.
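The stitching step can be sketched as a simple greedy overlap merge: repeatedly join the two fragments whose end and beginning overlap the most, until no pair overlaps by at least a minimum length. This is a hypothetical illustration (`overlap`, `greedy_assemble`, and `min_len` are invented names), not the method of any particular metagenomic assembler; production tools typically build overlap or de Bruijn graphs and must cope with sequencing errors, repeats, and fragments from many organisms at once.

```python
# Toy greedy assembly by suffix-prefix overlap (hypothetical names, illustration only).


def overlap(a: str, b: str, min_len: int) -> int:
    """Length of the longest suffix of a that equals a prefix of b, or 0 if shorter than min_len."""
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0


def greedy_assemble(fragments: list[str], min_len: int = 3) -> list[str]:
    """Repeatedly merge the pair of fragments with the largest overlap.

    Returns one or more contigs: fragments that never overlap by at least
    min_len stay separate. Fragments buried in the middle of another are
    not handled, which a real assembler would need to do.
    """
    contigs = list(dict.fromkeys(fragments))  # drop exact duplicates, keep order
    while len(contigs) > 1:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            break  # nothing left to stitch together
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)
    return contigs


# Three hypothetical overlapping fragments of one short sequence.
print(greedy_assemble(["ATTAGACC", "GACCTGCC", "TGCCGGAA"]))  # ['ATTAGACCTGCCGGAA']
```

The greedy choice at each step is also why such methods yield candidate genomes rather than certainties: with repeats or noisy reads, the locally best merge can be the wrong one.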

Covid-19 did much to catalyze the further development of WBE, and many countries initiated WBE programs to predict hospital admissions. This required correlating the quantity of viral genetic material detected in wastewater with patient numbers and deaths.

This surge in the global use of WBE pointed to a need for standardization. To be useful both nationally and internationally, samples needed to be collected from sewers and measured in a uniform manner.

The U.S. WBE Covid response initially struggled with the piecemeal nature of wastewater sampling as different jurisdictions, universities, and companies set up their own monitoring schemes. In addition, CDC employees struggled to obtain hospital-level admission data and had to rely instead on state-level data, which made building a predictive tool for hospital admissions difficult and its forecasts unreliable.

Switzerland, on the other hand, had a highly effective sampling method. Its samples were collected in a standardized way and all measured at a single central facility, making quantitative data easy to compare. This allowed it to detect Covid variants before they appeared in the patient population.

The British company Faculty AI also built the highly successful UK Covid-19 Early Warning System, which incorporated wastewater samples alongside calls to hospitals and positive Covid tests. These inputs were integrated into a machine learning model that combined data at the national, regional, and hospital levels to provide accurate three-week forecasts of admissions for individual hospitals.

The most successful nations were those that issued clear standard operating procedures for sampling and analysis and provided easy routes for reporting data to a central repository, but allowed local bodies to determine where they sampled.

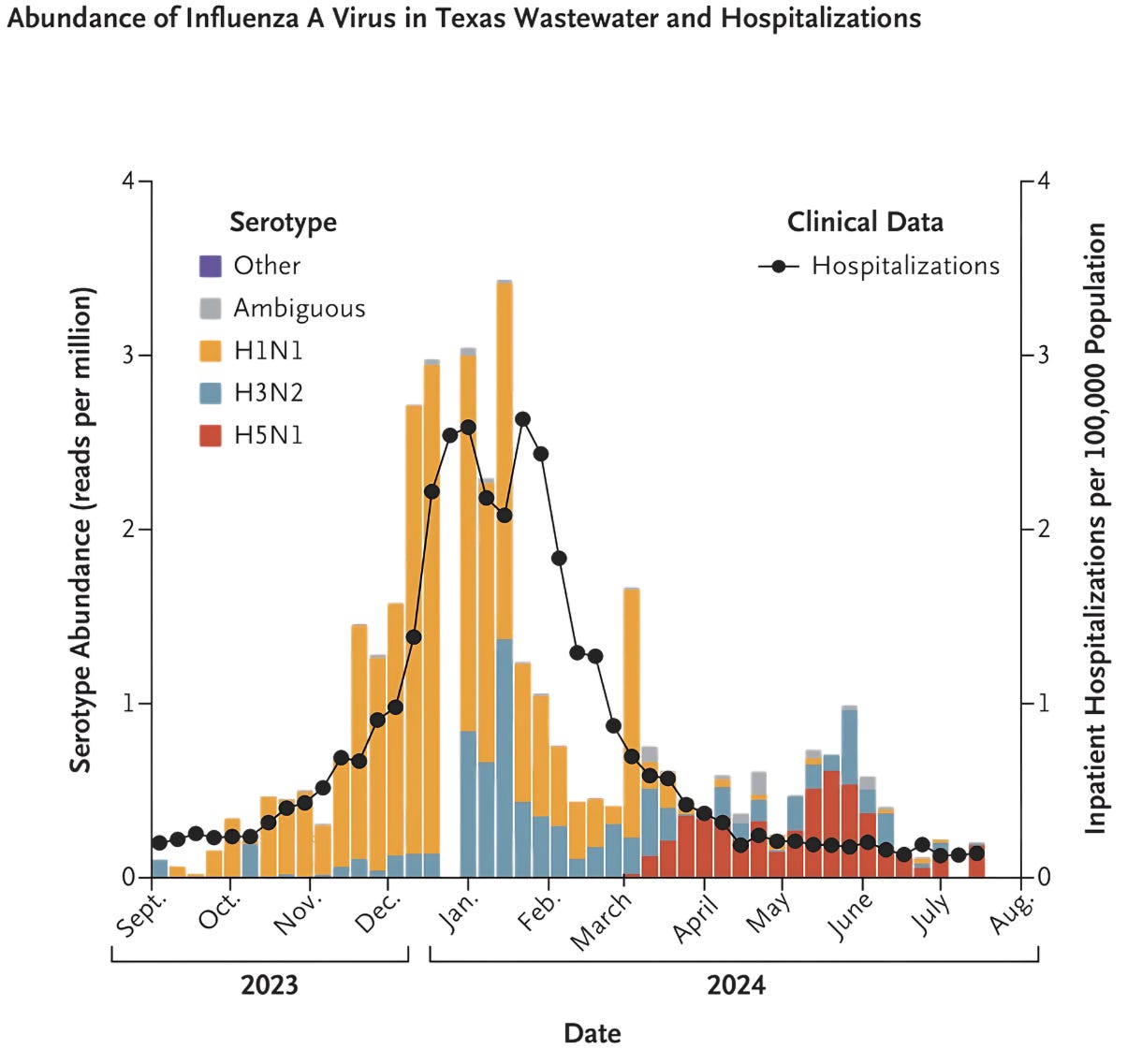

Many of the lessons learned in the U.S. from the poor handling of Covid wastewater data were applied to the H5N1 dairy cattle outbreak in Texas in 2024. Researchers monitoring wastewater for hundreds of viruses were able to detect H5N1 in urban areas before there were any human cases and before it had been detected by other means.

Ultimately, many of the difficulties of WBE programs stem from the challenge of extracting data from an environment that degrades both information quality and resolution. An effective WBE program would bring sampling closer to the neighborhood, hospital, house, or even individual, disaggregating data to provide greater resolution on the health of individuals. Doing this will require a change in perspective, seeing excrement as simply another data stream that we can read to understand community health.

For much of human history, sewage was not merely waste. Early societies saw its agricultural and energetic value, using it to fertilize fields, fuel fires, and, later, extract critical chemicals like saltpeter. As cities grew and the toll of disease mounted, a new philosophy emerged: health over harvest. Sewage became something to be hidden, exiled, and forgotten.

But today, in a world of increasingly strained resources, rising populations, and stagnant growth, we are beginning to revisit the effluent that flows beneath our feet. Recycling nitrogen, phosphorus, and carbon is no longer just environmentally responsible; it's economically and strategically essential. Attention to sewage is even more vital as the data within our wastewater — viral fragments, pharmaceutical residues, and metabolic byproducts — emerges as a powerful public health tool.

This evolution marks a return to an older logic, updated by modern science: that what we flush is not just filth, but information, energy, and opportunity. Bazalgette’s achievements were wonders for his time, but now, sewage is a resource too valuable to waste. The next great civil engineer will be the one able, paradoxically, to bring sewage back into human life, enabling it to be assayed and mined, restoring its value to those who made it.

Calum Drysdale is a PhD student in London, building low-cost automated bioprocess optimization robotics, and is also the co-host of the Anglofuturism podcast.

Cite: Drysdale, C. “What We Find in the Sewers.” Asimov Press (2025). https://doi.org/10.62211/26he-98yt

Lead image by Ella Watkins-Dulaney.

While Neolithic farmers were unaware of the mechanisms behind fertilization, the deliberate use of manure suggests a sophisticated understanding of its effects.

Mesopotamian and Levantine cities had approximately 3-4 kilometers of manured land around their walls, an area limited by how far one could economically carry dung from the city.

In modern Iraq, flattened dung cakes called Muttal are still used as fuel. In the UAE, camel dung is used to fuel industrial cement production, replacing coal, though it takes double the weight of dung to produce the same energy.

The Roman measure of water flow was a unit of area (the cross-section of a pipe), not of volume per unit of time. It was assumed that when the intermittent flow of the aqueducts arrived, it would immediately flow down pipes. A larger pipe gave a wealthy customer a greater fraction of this flow.

Church floors were another common source of nitrogen, as during long services women would urinate beneath the pews, creating major deposits of nitrogen-containing urea.

Because saltpetermen were so detested, this system of saltpeter extraction came at a significant social cost, which would plague Queen Elizabeth’s successors, contribute to the Civil War, and even contribute to the Royalist defeat.

A further problem was that the growing city made transporting sewage to the ever-more distant fields more expensive, reducing the market for the contents of cesspits. It was reported in 1842 that the cost of emptying a cesspool was one shilling (a workman’s wage was about three shillings per day), and many families had their rents collected weekly. Poorer families often had no choice but to let sewage build up.

Stephen Halliday, in his book The Great Stink of London: Sir Joseph Bazalgette and the Cleansing of the Victorian Capital, provides an authoritative overview of the bureaucratic and political wrangling that preceded the building of the sewers, which is unfortunately beyond the scope of this piece. Much of the opposition stemmed from the reluctance of parish and vestry ratepayers to hand over money and power to a new body. Others feared that the new Metropolitan Board of Works, the first urban administration the London metropolis had ever had, would grow overly powerful and even come to rival the Imperial Parliament.

He was, for his time, an eccentric: a vegetarian and teetotaller. Notably, his drink of choice was distilled water, which he saw as sufficiently pure, a remarkable position in the decades before Robert Koch identified the Vibrio cholerae bacterium.

This is the same process believed to produce will-o’-the-wisp lights above swamps: decaying matter releases methane that ignites, creating small flickers of light said to lure wanderers to their deaths.

Gold occurs at 0.01 – 0.1 µg kg⁻¹ in many plant‑based foods and at similar levels in drinking water. A normal diet gives hundreds of nanograms of elemental gold per day, but >95 percent is not absorbed.