What Makes a Mature Science

Mechanism alone cannot make a science credible. A mature science must describe its subject matter in terms of entities, properties, and rules.

Jan Baptist van Helmont (1580–1644) was an astute thinker for his day. It was he, for instance, who coined the term “gas,” which he derived from the Greek word for chaos (χᾰ́ος). But he is better known for an experiment he ran in the early 1600s investigating the growth of plants and trees. He took a 5-pound willow seedling, planted it in 200 pounds of soil, and carefully watered the tree for five years. He then separated the tree from the soil, weighed both, and found that the tree now weighed around 169 pounds while the dry soil weighed 199 pounds and 14 ounces.

This was a powerful demonstration that the weight of the tree did not come from the soil, but van Helmont drew the wrong conclusion: he took this as evidence that the element of water could turn into wood. Despite his work with gases, it never occurred to him that most of the weight might be drawn from substances in the air.

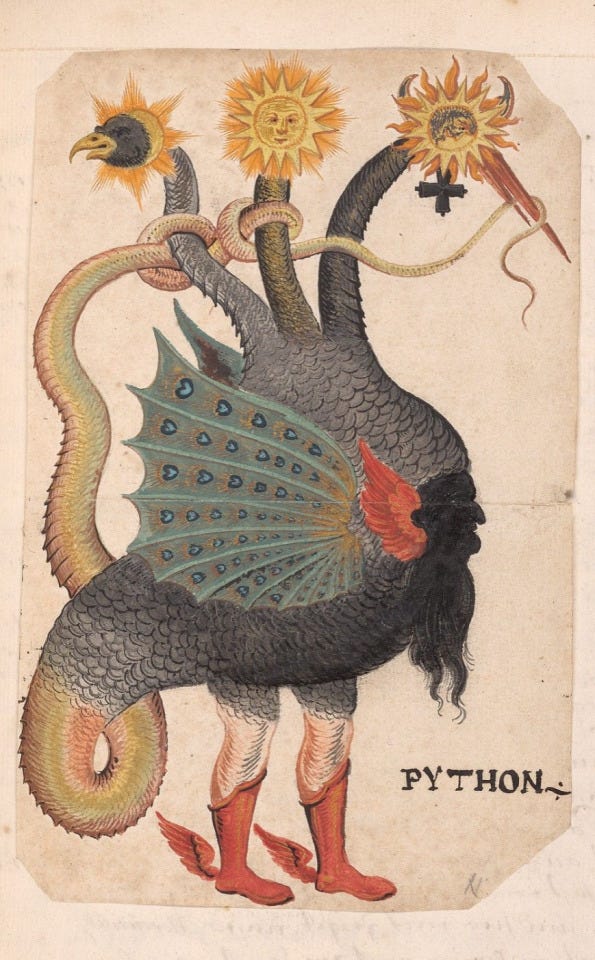

As an alchemist, van Helmont believed other strange ideas as well, like that you can transform basil leaves into live scorpions by grinding them up, placing the ground basil between two bricks, and leaving the whole arrangement to bake in the sun. (You won’t be surprised to learn that this does not replicate.) Here, van Helmont may have actually been a moderate — other alchemists said that the smell of basil could breed scorpions inside your brain1 or that, if you rub a magnet “briskly” with garlic or place it near a diamond, it will lose its force.

The alchemy of van Helmont’s day was not a mature science. But today’s chemistry is. At some point between the 17th and 18th centuries, the study of matter and substance went from being an immature science, a bunch of people hanging around and wearing funny hats, to a mature one, a bunch of scientists hanging around and wearing funny hats. But how exactly does a field of science become mature?

One answer is that a mature science talks about mechanisms.

This is a good start, but it’s often misunderstood. People are perfectly happy to draw one box between two other boxes in a flow chart, and call that a “mechanism.” But while A → Z → B may be slightly more detailed than A → B, it’s still superficial. There is not — contrary to what people sometimes claim — a state change where if you put enough boxes in your flow chart, you suddenly have a real science.

What we need, then, is some way to move beyond generalization; to go from classifying what people see to being able to explain the inner workings. “Mechanistic” is the right direction, but we prefer to say that a science begins maturing when it starts describing its corner of the universe in terms of entities, properties, and rules.

There is a process by which this happens. First, we must begin by asking, what are the nuts and what are the bolts? We must identify the objects or processes that any given branch of science cares about, the hidden bits bouncing around under the hood. These are entities.

Next, we must ask: how do the nuts and bolts differ? Even within nuts and bolts, there is often variation. Perhaps some bolts are longer than others, or some nuts are hexagonal, while others are square or winged. These are properties.

And finally, what laws describe the dynamics of a system, the way it responds to forces, or changes over time? These are rules.

Every reasonably mature science provides answers to these questions. Chemistry, for example, has already gone through the process of delineating its entities and rules. But other sciences are waiting, or even stuck, at different points along the way. Let’s look at two other sciences in particular, each at a different stage of development.

Biology is partway through this process, but its maturity is unevenly distributed: in some areas, it’s fully grown, while in others, we’re still reaching for the concepts we’ll need to see how things truly work.

Psychology is even further behind. The search for the entities of the mind has barely begun, if it has started at all. Psychology aspires to goals like preventing procrastination and addiction, describing the dimensions of personality, and curing conditions like anxiety and depression. The field is going to need mechanistic models to get there. Psychology must have its alchemy-to-chemistry moment to offer any hope at all of understanding what’s happening inside our minds.

Tech Model Universe Club

Thinking mechanistically about your subject means thinking about your section of the world as if you were making it from scratch. When building a model railroad display, you have to begin by placing an order at the hobby store, which requires having a pretty good notion of what parts you’ll need. The parts, whether you’re studying a model railroad, a beetle, or the entire universe, are the entities, the guts of the machine. To understand something, you need to know what it’s made of.

When preparing your order for the hobby store, the list can only be so long. There might be 100, 200, or 5,000 different kinds of parts in a steam engine or a jumbo jet. Even so, you know that the number is finite. This is a weird but profound implication of thinking in terms of entities. We don’t yet know how many kinds of fundamental particles there are in the universe, but we do know that the quantity has some upper bound.

This makes mechanistic thinking different from just offering descriptions, and makes science different from engineering. You can use a large box of Legos to build a nearly infinite number of objects, so the study of Lego kits per se can go on forever. But there is a finite number of Legos in the box, and unless each Lego is unique, there is an even smaller number of types of blocks.

But thinking about entities alone isn’t enough to give you a model — each entity also has properties. For example, a gear is one of the entities you find in an engine. Gears are all the same kind of part, but each gear has different properties: different radii, different numbers of teeth, and so on.

Sometimes the relationship between entities and properties is fixed. Every proton has an electric charge of 160.2176634 zeptocoulombs, for example, but charge is still a property of the entity “proton.” Other times, entities of the same kind can have different properties. Two atoms of sulfur can have different numbers of neutrons, making them different isotopes. Properties are the dimensions along which you can measure an entity.

Finally, there are rules. When two entities meet in a dark wood, what happens? A rule describes how entities interact based on their properties. Molecules interact according to universal principles of conservation of energy and momentum. Legos interact in terms of bricks and studs — and short of introducing hot glue to the mix (“high-energy Lego”), Lego bricks combine only in certain ways.

Physics and chemistry, when used to look at a cannonball, will describe it in terms of different entities. To an adherent of Newtonian physics, the cannonball itself is an entity: it’s an object with mass, position, and velocity — properties which evolve as it interacts with other entities (for example, the Earth) according to certain laws.

To a chemist, the cannonball is composed of many tiny entities, atoms, each of which has properties like atomic mass, and which interact according to differing sets of laws. Such an understanding allows us to anticipate what might happen if the cannonball were to come to rest in a vat of H2SO4.

These paradigms overlap enough to be treated as compatible: mass is a property in both systems, for example, and the conceptions of mass are similar in both. But the paradigms are different enough that they have divergent practical uses. You wouldn’t describe the cannonball in terms of position and velocity if you were trying to determine whether leaving it out in a storm might cause it to rust, and you wouldn’t describe it in terms of atoms and chemical interactions when trying to determine where it will land.
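To make the Newtonian view concrete, here is a minimal Python sketch of the cannonball as an entity with properties, evolving under one rule. The names `Cannonball`, `step`, and `landing_distance` and the launch numbers are invented for illustration, and air resistance is ignored, so treat it as a toy under those assumptions rather than a ballistics model.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration near Earth's surface, m/s^2


@dataclass
class Cannonball:
    """The entity, with the properties a Newtonian model tracks."""
    mass: float  # kg (has no effect on the path when drag is ignored)
    x: float     # horizontal position, m
    y: float     # height, m
    vx: float    # horizontal velocity, m/s
    vy: float    # vertical velocity, m/s


def step(ball: Cannonball, dt: float) -> None:
    """The rule: gravity changes velocity, velocity changes position."""
    ball.vy -= G * dt
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt


def landing_distance(ball: Cannonball, dt: float = 0.001) -> float:
    """Apply the rule over and over until the ball is back at ground level."""
    while True:
        step(ball, dt)
        if ball.y <= 0.0:
            return ball.x


# Hypothetical launch: 30 m/s forward and 40 m/s upward from ground level.
shot = Cannonball(mass=5.0, x=0.0, y=0.0, vx=30.0, vy=40.0)
distance = landing_distance(shot)  # close to the textbook 2 * vx * vy / G
```

Nothing in this model can say whether the ball will rust. That question needs the chemist's entities instead.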

Big things that work are made up of little things interacting according to some kinds of rules. To a first approximation, everything that happens in a computer is the result of very simple entities (binary memory and some basic Boolean logic) combined over and over in very complex ways. The combination is complicated, but the basic entities are small in number and relatively simple. It’s hard to get more basic than zeroes and ones and NOT, AND, and XOR.
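As a small illustration of how far those few entities go, the sketch below builds integer addition out of nothing but NOT, AND, and XOR acting on single bits. The function names are chosen for this example, and the bit shifts inside `add` are only plumbing for feeding bits in and out. It is a standard ripple-carry adder, not a description of any particular chip.

```python
def NOT(a: int) -> int:
    return 1 - a


def AND(a: int, b: int) -> int:
    return a & b


def XOR(a: int, b: int) -> int:
    return a ^ b


def OR(a: int, b: int) -> int:
    # De Morgan's law: (a OR b) equals NOT(NOT a AND NOT b).
    return NOT(AND(NOT(a), NOT(b)))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit at a time."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # any overflow past `width` bits is dropped


print(add(5, 3))  # 8
```

Three kinds of gate, repeated eight times, already give arithmetic on bytes. The rest of a computer is, loosely speaking, more of the same.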

This is the way that all systems work. Things are made up of smaller, simpler things that follow their own logic. For some reason, we tend to find this counterintuitive — where we haven’t been trained to think mechanically, we usually default to thinking impressionistically.

This is a problem. Without a model, you fall into circular reasoning. This is how psychology ends up with explanations like, “people place greater importance on more recent events because of the recency bias,” or how medicine ends up with explanations like, “you’re in pain because you have fibromyalgia” (Neo-Latin fibro-, Greek μυο- "muscle", Greek άλγος "pain").

But when you have a grasp of the rules, what appears to be chaos reveals an underlying order. Entities follow certain rules and have certain properties that behave in a logical manner, which makes new kinds of understanding possible. This is why our greatest scientific successes come when we start building models. There’s a sharp divide between “impressionistic” research that deals with simple predictions and abstract nouns (merely documenting effects and phenomena) and “mechanical” research that attempts to model what we observe in terms of rules and entities. Both can be useful, but there are some things that impressionistic research simply can’t do.

Drunk Cats and Second-Order Effects

Most video games are abstract. This is not the case for Dwarf Fortress, the game with the unofficial slogan, “Losing is fun!”

Ostensibly, Dwarf Fortress is a game about managing a group of dwarves as they establish a colony deep in the wilderness. In practice, Dwarf Fortress is a game about navigating a wild and teeming mechanical universe somewhat like our own. Elaborate, emergent stories unfold from complex systems, but the struggle to survive in its simulated world always ends in the same way: catastrophic failure.

In most video games, bodily harm is abstracted. It’s often abstracted so much that all harm is reduced to fit within a single number: Hit Points, or HP. In Dwarf Fortress, however, injuries get really specific. There are five levels of injury, and a dwarf (or any other creature) can be injured in body parts ranging from “right lower arm” to “pancreas.”

Despite the intensity of detail, Dwarf Fortress is still more abstract than real life. Dwarves aren’t made out of individual cells; they don’t have DNA (yet), though they do have eyelids. But the fact that Dwarf Fortress is unusually mechanical compared to other video games makes it a good example of the difference.

Dwarf Fortress is complicated enough that no one, not even its creators, can understand the full implications of a simple change. So whenever they release an update with new features, there's always a period of several weeks spent cleaning up tons and tons of bugs. After one of these updates, Tarn Adams, the developer, got a bug report that said, “There’s cat vomit all over my tavern, and there’s a few dead cats. Why? This is broken.”

It turned out that in the latest update, Adams had added taverns. Alcohol would get spilled on the floor, cats would wander in, and because of pre-existing code intended to let dwarves walk around leaving bloody footprints, they would get alcohol on their paws. Then, because of a different bit of code that let cats lick themselves clean, they would lick the alcohol off.

Adams had written the code so that a cat licking itself clean would ingest a “full dose” of whatever it was covered in. But in this case, a full dose was a whole mug of alcohol, and when the code ran through all the size-based blood alcohol calculations for a cat-sized creature, this led to inebriation, vomiting, and even some dead cats.
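The chain of events is easier to see laid out as code. What follows is a toy Python reconstruction, not Dwarf Fortress's actual source: every name, unit, and threshold is made up, and the only things taken from the story are the three independent rules (feet pick up what is on the floor, grooming ingests a full dose, effects scale with body size).

```python
from dataclasses import dataclass
from typing import Optional

MUG_OF_ALCOHOL = 1.0  # one "full dose", arbitrary units (assumed)
VOMIT_AT = 5.0        # dose per unit of body size (assumed threshold)
FATAL_AT = 15.0       # dose per unit of body size (assumed threshold)


@dataclass
class Creature:
    name: str
    body_size: float                # relative to a dwarf = 1.0 (assumed scale)
    coating: Optional[str] = None   # whatever is stuck to its feet
    blood_alcohol: float = 0.0
    status: str = "fine"


def walk_through(creature: Creature, floor_substance: Optional[str]) -> None:
    """Rule written for bloody footprints: feet pick up what is on the floor."""
    if floor_substance is not None:
        creature.coating = floor_substance


def ingest_alcohol(creature: Creature, dose: float) -> None:
    """Rule written for drinkers: the effect scales with dose over body size."""
    creature.blood_alcohol += dose / creature.body_size
    if creature.blood_alcohol >= FATAL_AT:
        creature.status = "dead"
    elif creature.blood_alcohol >= VOMIT_AT:
        creature.status = "vomiting"


def lick_clean(creature: Creature) -> None:
    """Rule written for grooming: ingest a full dose of the coating."""
    if creature.coating == "alcohol":
        ingest_alcohol(creature, MUG_OF_ALCOHOL)
    creature.coating = None


cat = Creature("cat", body_size=0.1)
walk_through(cat, "alcohol")
lick_clean(cat)  # one trip across the tavern floor is enough to make it sick
```

No rule in the sketch mentions drunk cats. The outcome only appears when three rules written for other purposes meet in the same creature.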

In an abstract world, cats don’t die from licking beer off their paws. This kind of unforeseen coincidence couldn’t happen in the first place, because the abstract world isn’t complex enough. It can only happen in a mechanical world, which is why weird stuff like this happens in our world, and also in Dwarf Fortress.

This vomiting cat bug illustrates why most video games are abstract rather than mechanical. Detailed simulation is a lot of work and not very fun. A video game’s developers can never think through all the possible interactions and second-, third-, and higher-order effects, so mechanical design tends to lead to bizarre corner cases. You can see why God stopped releasing updates a few thousand years ago. The resulting bug fixes were too much of a headache.

It is not enough to appeal to entities (to talk of cats, taverns, and dwarves) and call it “mechanism.” Philo of Byzantium tried this in the 3rd century BC, looking at combustion and explaining it in terms of the action of atoms of fire. He was appealing to hidden entities, so why wasn’t his alchemy a mature science?

For one, his description was too vague to be a model. A model predicts specific consequences. In Dwarf Fortress, any creature with blood can become drunk; in Dwarf Fortress, cats have blood. So, without this consequence being at all foreseen, let alone intentional, in Dwarf Fortress, cats can get drunk.

Drunk cats are a natural and inevitable consequence of how the game was built. But what are the natural and inevitable consequences of Philo’s “atoms of fire”? Our guess is, not much. He saw fire and reasoned something like, “Fire is made of something. What is it made of? Fire atoms, of course!” But we hope you can see why this is barely different from saying that fire is made of fire goblins, or fire spirits, or fire Legos, at least in the absence of more detail.

You can always select a noun, say it’s the entity behind some phenomenon, and claim you found a mechanism. When you see a behavior you don’t understand, you can always attribute it to a new “script,” or attribute chemical reactions to a new “principle” or “essence.” People go to parties more or less because of a hidden quality called “extraversion.” Recruits succeed or fail at becoming Marines thanks to a hidden quality called “grit.” Opium causes sleep because it contains a “somniferous property.”

There’s an obvious problem with these claims: they are circular. But a second shortcoming is that this approach only ever gives you a laundry list: you can always add more to it. Thinking in entities should force you to narrow your list. In any machine, there are only so many kinds of nuts and bolts.

Like Sun Tzu said:

There are not more than five musical notes, yet the combinations of these five give rise to more melodies than can ever be heard. There are not more than five primary colors (blue, yellow, red, white, and black), yet in combination they produce more hues than can ever be seen. There are not more than five cardinal tastes (sour, acrid, salt, sweet, bitter), yet combinations of them yield more flavors than can ever be tasted. In battle, there are not more than two methods of attack: the direct and the indirect; yet these two in combination give rise to an endless series of maneuvers.

Chemistry first revealed that all naturally occurring matter on Earth is composed of just 94 elements, a finite list only slightly complicated by the eventual creation of a small number of synthetic elements, bringing the total to 118. That’s far more than the four classical elements, but it’s a surprisingly small number of entities to account for all the matter we interact with on Earth and the majority of the (visible, plausible) matter in the universe.

But we eventually found that these 118 elements were themselves merely different combinations of just three even more basic entities: protons, neutrons, and electrons. So much variation arose from such a small set of entities! Chemists never would have gotten there if they had continued to chase after surface-level descriptions of magnets, heat, and air. The only way to find the elements was to start building models of what was happening under the surface.

Biology

Early biologists were little more than naturalists. Their subject matter was the different species of plants and animals, and their methods were description and classification. But “species” did not serve as a good scientific entity, as people continue to discover to this very day. There are no objective criteria for telling the boundaries between species, and, as a result, the boundaries change all the time. A mature science, this was not.

In 1665, Robert Hooke looked at a slice of cork through his microscope and found that it was made up of little chambers. “Our Microscope informs us that the substance of Cork is altogether fill'd with Air,” he wrote, “perfectly enclosed in little Boxes or Cells distinct from one another.” By the 1680s, Antonie van Leeuwenhoek, using a microscope of his own design, had expanded our knowledge of this invisible world, finding tiny “animalcules” living in rainwater, in horse urine, and on his own teeth.

The discovery of cells and microbes seems to signal an obvious entities-and-rules moment for biology. It provides a very neat, literal answer to “What are animals and plants made of?” They are made of cells, which interact according to cell rules.

That said, there are reasons to believe these are still not the entities and rules we are looking for. While all living things are made up of cells, cells themselves cannot be cleanly enumerated. While there are distinct types, there’s as yet no finite set, and certainly no objective list, in contrast with the periodic table of elements in chemistry. You can count the total cells of an organism (~37 trillion in a human), but cell types are context-dependent, fuzzy at boundaries, and variable by classification — a lot like species, actually.

In 1859, with the publication of On the Origin of Species, it seemed Charles Darwin offered a more precise explanatory mechanism for biology: evolution. Its explanatory power wasn’t even reliant on DNA. Darwin didn’t know about DNA during his lifetime (it wasn’t actually “seen” until 1869, when Friedrich Miescher extracted strands of DNA from the pus on soldiers’ bandages), and he didn’t need to. The theory of evolution is much simpler and more profound. Any kind of replication with natural selection will lead to the accumulation of adaptations over time. It doesn’t matter what’s being copied — genes, code, memes — as long as there’s variation and selection, evolution kicks in. It's not about chromosomes, it’s about the internal logic of selection itself.
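The claim that selection does not care what is being copied can be sketched in a few lines of Python. In the toy below, `evolve` knows nothing about the replicators it is handed: it only ranks them with whatever `fitness` function it receives and copies the survivors with whatever `mutate` function it receives. The bit-string genomes, the fitness measure (count the 1s), the mutation rate, and the keep-the-better-half rule are all assumptions picked for illustration, not a model of any real organism.

```python
import random


def evolve(population, fitness, mutate, generations, rng):
    """Alternate selection and imperfect replication for several generations."""
    for _ in range(generations):
        # Selection: rank by fitness and keep the better half.
        ranked = sorted(population, key=fitness, reverse=True)
        survivors = ranked[: len(ranked) // 2]
        # Replication with variation: each survivor leaves two mutated copies.
        population = [mutate(parent, rng) for parent in survivors for _ in range(2)]
    return population


def count_ones(genome):
    """Toy fitness: the number of 1s in a tuple of bits."""
    return sum(genome)


def flip_bits(genome, rng, rate=0.02):
    """Toy variation: copy the genome, flipping each bit with a small probability."""
    return tuple(1 - bit if rng.random() < rate else bit for bit in genome)


def mean_fitness(population, fitness):
    return sum(fitness(g) for g in population) / len(population)


rng = random.Random(0)  # fixed seed so the run is repeatable
start = [tuple(rng.randint(0, 1) for _ in range(20)) for _ in range(50)]
end = evolve(start, count_ones, flip_bits, generations=40, rng=rng)
print(mean_fitness(start, count_ones), mean_fitness(end, count_ones))
```

Swapping in strings, programs, or anything else that can be copied with errors and scored leaves `evolve` unchanged, and that is the point of the paragraph above.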

The problem, however, is that this logic makes the entities and rules much less concrete: the entities are any organisms that vary. And even if the rules are the simple math of selection, all organisms are subject to selection. The set of Darwinian entities, everything that varies, is thus far too large to organize biology.

So what else is enumerable in biology?

Mendelian genetics in the early 20th century gave us another candidate. Even if an understanding of DNA wasn't necessary to come up with the theory of evolution, genes and their underlying pieces are very mechanistic. Genes, proteins, and nucleotides are much less ambiguous than cells.

There are only 5 nucleotide bases behind all life: adenine (A), cytosine (C), guanine (G) and thymine (T) in DNA, and uracil (U) taking the place of thymine in RNA. While there are over 500 amino acids in nature, only 22 α-amino acids appear in the code of life, incorporated into proteins.2

Recent developments in our understanding of cancer underscore the usefulness of genes as biological entities. For years, we’ve sorted cancers by where they appear: breast, colon, lung, etc. You would probably still find it natural to talk about brain cancer or pancreatic cancer. But as scientists gain more understanding of the inner workings of cancer cells, they’ve started re-classifying cancers based on the genetic mutations that caused them (e.g., BRAF V600) instead of their starting location. Instead of saying, “You have lung cancer,” a doctor might now say, “You have cancer with this specific genetic mutation, and it happens to be in your lung.”

It turns out that trying to understand cancer cells in terms of “what part of the cell is malfunctioning?” is a better approach than trying to understand the cells in terms of “what organ did it start in?” Not all cancerous cells that come from the lungs are cancerous for the same reason, just like not all motorcycles in the junkyard are junked for the same reason. Some of them you can take home and fix; others are scrap.

How a tumor responds to treatment usually has more to do with the mutations that caused it than with what part of the body happened to house the cells that developed those mutations. So two patients with “breast cancer” might benefit from very different treatments, while two patients with tumors in different parts of the body might nevertheless benefit from the same treatment. As long as you’re only looking at the superficial features of the cancer, you’re fated to be confused, whereas if you look at its genetic composition, you will learn how it works.

Successes like our advancing understanding of cancer make biology look mature. It’s hard to imagine a more textbook case of moving past the surface level and thinking in terms of pieces. The hope is that the same thing will soon happen for lots of diagnoses. There are signs that what we currently call “rheumatoid arthritis” may turn out to be more than one disease. Better genetic testing, like the testing that reshaped cancer diagnosis, may help make this clear.

There are other steps that might similarly advance biology into having the mechanical and predictive power of truly mature sciences, namely efforts to devise better tools and find principles free from exception.

Consider the invention of the telescope: astronomy could only advance so far until more precise tools allowed for closer observation of distant objects. The naked eye is OK, but once the telescope is invented, Galileo is soon using geometry to estimate the height of mountains on the moon, and launching astronomy as a mature scientific discipline.
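Galileo's mountain estimate is usually reconstructed as a right-triangle argument: a peak that still catches sunlight a distance d beyond the line between lunar day and night sits on a ray tangent to the moon's surface, so its height is the hypotenuse minus the radius. The Python sketch below follows that reconstruction. The function name is invented here, the radius is the modern value, and the one-twentieth-of-a-diameter figure is the observation reported in Sidereus Nuncius, so read the result as an illustration of the method rather than as Galileo's own arithmetic.

```python
import math

MOON_RADIUS_KM = 1737.4  # modern mean radius, not a figure Galileo had


def peak_height_km(distance_past_terminator_km: float,
                   radius_km: float = MOON_RADIUS_KM) -> float:
    """Height of a peak whose tip is lit while its base is still in shadow.

    The sunlit ray touches the surface at the terminator and meets the
    radius there at a right angle, so center-to-peak is a hypotenuse.
    """
    return math.hypot(radius_km, distance_past_terminator_km) - radius_km


# Bright points seen about 1/20 of the lunar diameter into the dark side.
d = (2 * MOON_RADIUS_KM) / 20
height = peak_height_km(d)  # several kilometers
```

One measured distance plus one geometric rule yields a number for something nobody could visit, which is the kind of leverage a better instrument provides.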

In biology, microscopes, fluorescent DNA dyes, ultrasound techniques, and anything else that allows scientists to better characterize the nuts and bolts of the field have advanced our understanding significantly beyond the discipline’s alchemy days. Tools like genetic sequencing made it possible to diagnose cancer based on mutations, rather than location, and made targeting of genetic diseases possible. We’ve known about base pairs, RNA, and DNA for decades, but we couldn't make full use of them because our tools were so poor. We need to help the Spinozas of bioinformatics grind out new lenses.

Beyond better tooling, biology would benefit greatly from more efforts to find true organizing principles and patterns. Currently, every rule has exceptions, and the exceptions have more exceptions, as far as the eye can see.

This resembles the time when astronomers thought the Earth was the center of the universe. They had some workable concepts — planet, star, comet — but everything was sort of backwards, and remained that way until the adoption of the heliocentric model. We’re looking for a reorganization that will give biologists real principles and free them from the exceptions.

This has been extremely difficult to do. In molecular biology, for example, scientists believed for many years that genetic information flows in a single direction, from DNA to RNA to protein. In this view, proteins are the functional products, DNA provides the master instructions, and RNA generates a temporary working copy.3 But there are more and more exceptions to this “rule” — including an enzyme called reverse transcriptase, which makes DNA from an RNA template.

And yet, while efforts to uncover mechanisms and find organizing principles are important, it might be difficult to reduce complexity down enough to hew a mature science from biology. Perhaps “biology” is too big of a tent to expect that everyone inside will benefit from the same toolkit. For some problems, like infectious disease, it’s obviously helpful to think in terms of cells. For others, like gene therapy, focusing on nucleotides and amino acids holds more promise. And for yet others, thinking in the broader terms of evolution, in almost a pure information theory way, suits best — who cares how the units of selection are encoded?

Psychology

Even more than biology, psychology remains in its alchemy phase. Psychology today is almost 100 percent impressionistic — nothing is understood in terms of anything like a blueprint, and no one can point to the nuts and bolts. While psychologists are clever at naming things, these names are usually nothing more than abstract nouns; at best, they merely document effects and phenomena, without explaining them. As our friend Adam once put it:

The problem with this approach is that it gets you tangled up in things that don’t actually exist. What is “zest for life”? It is literally “how you respond to the Zest for Life Scale”. And what does the Zest for Life Scale measure? It measures … zest for life. If you push hard enough on any psychological abstraction, you will eventually find a tautology like this.

It’s useful to notice that “chunking” information makes it easier to remember; it jumps out at us from everyday cases like phone numbers. Which is easier to remember, 348-428-2328 or 3484282328?

It’s likewise interesting to notice that damage to the hippocampus sometimes makes it so that people forget new experiences within just a few minutes, even though they can still remember old information. It’s even productive to speculate that this means there are functional categories like “short-term memory” and “long-term memory.” But noticing all these things isn’t the same as knowing how memory is actually stored.

It’s intriguing to notice that when you ask people to rate themselves (or others) on large lists of adjectives, and then you run a factor analysis on those ratings from lots of people, you often end up with a five-factor solution. And it's even reasonable to give these clusters names like Conscientiousness, Extraversion, Agreeableness, Neuroticism, and Openness to Experience, and to call them, “the Big Five.”

But this isn’t the same as knowing why some people are more agreeable and some people are less agreeable. There must be some knob, switch, or parameter in the mind that causes the surface differences we call “agreeableness.” But we don’t know what that entity is, and there’s no theory behind it. And it’s not going to be an actual knob, switch, or parameter; it will be a difference in some other entity or some property of an entity, once we figure out which entities are involved.

To become a mature science and have its alchemy-to-chemistry moment, psychology would need to figure out a set of rules and entities that it can use to model its findings, so psychologists could start building physical models and doing mechanical research.

It can sound corny, even dim, to talk about “finding the periodic table for psychology.” But at the end of the day, minds are made up of something. Maybe they’re made up of about 118 things, like the chemical elements. Maybe they can be reduced to only about three things, like the jumpscare we got when we discovered atomic physics. Or maybe they cannot be reduced below 10,000 ingredients. We don’t know for sure, but the stew upstairs is made of some set of identifiable ingredients, and it’s reasonable to ask — what’s cooking?

There have been a few proposals for how to devise an entities-and-rules-based framework for psychology, from the admittedly goofy to the mechanically respectable.

Let’s begin with the goofiest — our first example is The Sims. We can hear some readers objecting that The Sims is not a scientific model. Label them however you want, but The Sims and its descendants are, in fact, models for human behavior, and that makes them a psychological model, like it or not. So are other video games that try to mimic the inputs and outputs of real minds.

Sims keep themselves fed, clean, and entertained, and if you watch them for a while, you might forget that they are artificial. They’re missing many things, like our language abilities — for at the beginning of time, Will Wright cursed them to only speak Simlish — but they provide a decent facsimile of human behavior.

The first test is whether the model can mimic its target subjects, even roughly. But better than mimicry is replacement — anything that can actually serve as a substitute for human mental tasks makes a strong case for itself as a model.

Sims can’t replace human mental labor, so it’s time for a less goofy example of a framework: computers. Digital computers are an interesting false start. From the days of the simple calculator, they have been able to substitute for human mental tasks, and they’re much more effective at some tasks than we are. Try it: what is 3482 × 9192?

In theory, the digital computer can run any algorithm, so whatever algorithms are behind our minds could in principle run on a computer. But the average computer program does not behave much like a human being, or any other animal. ELIZA tricked many people with her pattern matching, but overall she didn’t act like people do.

By now, many of you are shouting at your screens about deep learning and artificial neural networks. That’s the next proposal to consider. When you look at what deep learning and large language models can do today, it's hard not to notice some eerie similarities to how humans and animals behave. Image classifiers can pick out birds and stop signs about as well as many people can; large language models like ChatGPT can read and write about as well as many people can; self-driving cars can drive better than many people can, and so on.

Models in this tradition boil down to just a few basic building blocks — neurons and connections — running on straightforward rules like backpropagation. Sure, the architectures can get complex, but the foundation is simple. They seem promising candidates for entities with properties and rules.

Still, there’s reason to be cautious about treating neural networks as literal models of the mind. For all the “neural” branding, these systems don’t mimic real neurons all that well. In some areas, neural networks have stalled out. They got very good at StarCraft II by 2019, but still haven’t surpassed the best human players.

But the deeper issue is that neural nets are incredibly powerful at approximating functions. Just because a neural network pulls off something that looks like human behavior doesn’t mean that humans do it the same way: a network can reproduce a wide range of input-output behavior without sharing any of the brain’s mechanisms.

Early steam engines were moody and labor-intensive, prone to speeding up or slowing down without warning. Keeping them steady required constant human attention. Operators had to manually tweak valves, monitor pressure, and steady the engine by hand. Failure to do so meant the engine could lag, choke, or, in extreme cases, explode.

That changed with the invention of the flyball governor, a clever bit of engineering introduced by James Watt in the late 18th century. Using a pair of spinning metal balls that rose and fell with engine speed, the device could automatically throttle steam flow, stabilizing engine speed without human touch. It was one of the first automatic control systems in industrial history, and it marked a turning point: engineers could sit back and read the newspaper, while the engine carried out critical adjustments all on its own.

Control systems increasingly substitute for human judgment and attention. Keeping a large house warm in the winter and cool in the summer is a task that once required a team of servants. Now it’s handled by a simple feedback loop, a thermostat. Humidity in greenhouses was once handled manually. Now it’s at least partially automatic. When driving, you used to have to manage the speed of the car yourself! Today, you can let cruise control do it for you.

Negative feedback loops, also called “control systems” or “governors,” as in Watt’s flyball governor, regularly replace human mental tasks. When organized hierarchically, even simple control systems look and act a lot like biological intelligences, as seen in The Senster. They can substitute for our functions; they are convincing and effective, which is a sign that they may be a good model for at least some of our psychology.4
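A negative feedback loop of this kind can be sketched in a few lines. The example below is a toy on/off thermostat, not a description of any real device: the function name `simulate_thermostat`, the heater power, the heat-loss rate, and the outside temperature are all made-up values chosen for illustration.

```python
def simulate_thermostat(set_point, start_temp, steps,
                        heater_power=2.0, loss_rate=0.1, outside_temp=5.0):
    """Simulate a toy on/off thermostat; return the temperature after each tick."""
    temp = start_temp
    history = []
    for _ in range(steps):
        # Negative feedback: the heater runs only while the controlled
        # signal (room temperature) is below the set point.
        heater_on = temp < set_point
        heating = heater_power if heater_on else 0.0
        # The room leaks heat in proportion to how much warmer it is than outside.
        cooling = loss_rate * (temp - outside_temp)
        temp = temp + heating - cooling
        history.append(temp)
    return history


temps = simulate_thermostat(set_point=20.0, start_temp=5.0, steps=100)
print(f"final temperature: {temps[-1]:.2f}")
```

With these assumed numbers, the temperature climbs from 5 degrees and then hovers within a couple of degrees of the set point, with no one watching it. The loop has only three ingredients: a signal, a set point, and an action that pushes the signal back toward the set point.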

Don’t listen to the haters who say that psychology is hopelessly nebulous. There are fundamental questions in psychology.

We experience things and store parts of what happens as memory, so “how does memory work?” seems like a fundamental question. Those memories are stored somehow and in some format, and eventually, someone will discover the details.

We are driven to keep warm, to eat salt, and for unfathomable reasons, many of us are driven to play Candy Crush Saga for hours on end. These behaviors all happen for a reason — even if not a good one. The drives appear to be distinct, so there must be a list. “How many drives are there?” seems like a fundamental question.

When you conceive of the drives as control systems, you invite other questions. We have a drive to eat salt, but what signal is being controlled? What is the set point of the salt governor? What are the set points for the drives for safety, status, or warmth? And what governors are behind behaviors like hobby tunneling?

When we look at some part of psychology — a behavior, an utterance, a tendency towards road rage — we have to be able to explain what we observe in terms of something. Something is happening under the hood, some kinds of gears are turning — except they’re not gears, and nobody yet knows what they are.

The same is true of biology. Life is made up of little machines, and in this case, we know something about them. DNA is code, RNA is messages, the mitochondria are the powerhouses of the cell. We’ve come a long way from rubbing magnets “briskly” with garlic. That path led through absurd but passingly mechanical questions like “can water turn into wood?” to better questions like, “when a tree increases in weight, it must be gaining mass by adding new matter to its form. And if the new mass is not coming from the soil, it must be coming from somewhere else. Where are these new atoms drawn from?”

Someone has to pose these questions and frame them in a way that makes them potentially solvable. And when they do, it’s up to all of us to try to answer them.

Slime Mold Time Mold is a mad science hive mind with a blog. They are making waffles for breakfast. They are two children playing in a stream. You can read their blog at slimemoldtimemold.com and follow them on X at @mold_time.

Cite: Slime Mold Time Mold. “What Makes a Mature Science.” Asimov Press (2025). https://doi.org/10.62211/26jw-08ok

Lead image by Ella Watkins-Dulaney.

1. “There is a property in Basil to propagate Scorpions, and that by the smell thereof they are bred in the brains of men, is much advanced by Hollerius, who found this Insect in the brains of a man that delighted much in this smell,” Sir Thomas Browne, Pseudodoxia Epidemica. In any case, be careful the next time you are having pesto.

2. The other 478+ are considered “non-canonical,” basically fanfiction aminos, so we assume that they are used extensively in God’s omake.

3. The Central Dogma, as originally described by Francis Crick, has never been violated. All that Crick said is that “Once information has got into a protein it can’t get out again.” He never argued that RNA could not be reverse-transcribed into DNA; that was simply a belief wrongly held by many biologists.

4. This idea is old, but it’s still underrated, which is why we wrote a series making the case for this perspective in more detail.
